TSP stands for Travelling Sales Man problem, which minimises the cost of travelling through all the cities by minimising the distance travelled.

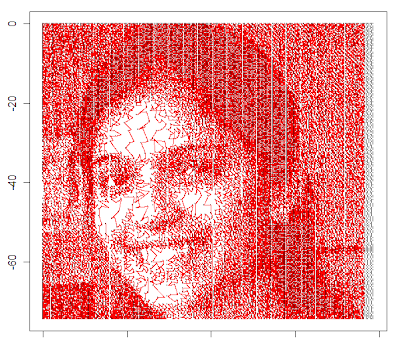

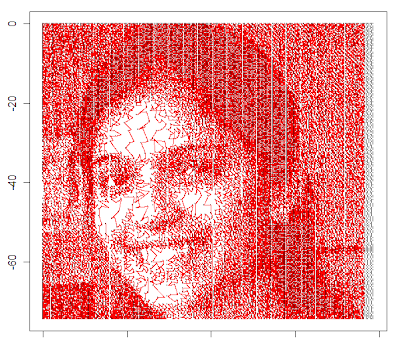

TSP art is essentially taking a picture and converting it into a scatterplot and then connecting the dots in scatterplot by lines to produce a not so smooth image, but aesthetically good one for sure.

Some examples of TSP art is

here

Some of the applications of this work could potentially be around making the images move - as in, eyes, arms, legs can be segmented once we take images and make them learn features to understand different body parts. Once this is done, an image can move as per one's wish!

Here's the workings of creating TSP art:

Step1: Converting image into scatterplot

1. Load image

2. Extract pixel values for each x & y points of the image

3. Extract pixel values of each x & y where the pixel value is greater than a certain threshold

and we have the scatterplot for an image

Step 2: create TSP while assumign the scatterplot points as cities

1. Assume each data point of the image as a city with x & y co-ordinates

2. Identify the path through which a salesman can go through each city while minimising the distance travelled

3. Plot the path

Here's the output of the above steps.

|

| TSP art |

The code is here:

import Image

image = Image.open("folder").convert("L")

pix=image.load()

outfile=open('folder','w')

for i in xrange(image.size[0]):

for j in xrange(image.size[1]):

outData='%s\t%s\t%s' % (i,j,data[i][j])

outfile.write(outData + '\n')

train <- read.table("folder",header=TRUE, sep=",", na.strings="NA", dec=".", strip.white=TRUE)

newtrain=train[train$rev_pix>150,]

plot(newtrain$x,-newtrain$y,data=newtrain)

library(geosphere)

library(TSP)

newtrain$pixel=NULL

newtrain$rev_pixel=NULL

newtrain=newtrain/3

newtrain2=newtrain

newtrain3=newtrain2[1:1000,]

d=dist(newtrain3)

tsp=TSP(d)

tsp <- insert_dummy(tsp, label = "cut")

tour <- solve_TSP(tsp, method = "nearest_insertion")

path1 <- cut_tour(tour, "cut")

newtrain4=newtrain2[1001:2000,]

d=dist(newtrain4)

tsp=TSP(d)

tsp <- insert_dummy(tsp, label = "cut")

tour <- solve_TSP(tsp, method = "nearest_insertion")

path2 <- cut_tour(tour, "cut")

newtrain5=newtrain2[2001:3000,]

d=dist(newtrain5)

tsp=TSP(d)

tsp <- insert_dummy(tsp, label = "cut")

tour <- solve_TSP(tsp, method = "nearest_insertion")

path3 <- cut_tour(tour, "cut")

newtrain6=newtrain2[3001:4000,]

d=dist(newtrain6)

tsp=TSP(d)

tsp <- insert_dummy(tsp, label = "cut")

tour <- solve_TSP(tsp, method = "nearest_insertion")

path4 <- cut_tour(tour, "cut")

newtrain7=newtrain2[4001:5000,]

d=dist(newtrain7)

tsp=TSP(d)

tsp <- insert_dummy(tsp, label = "cut")

tour <- solve_TSP(tsp, method = "nearest_insertion")

path5 <- cut_tour(tour, "cut")

newtrain8=newtrain2[5001:6000,]

d=dist(newtrain8)

tsp=TSP(d)

tsp <- insert_dummy(tsp, label = "cut")

tour <- solve_TSP(tsp, method = "nearest_insertion")

path6 <- cut_tour(tour, "cut")

newtrain9=newtrain2[6001:7000,]

d=dist(newtrain9)

tsp=TSP(d)

tsp <- insert_dummy(tsp, label = "cut")

tour <- solve_TSP(tsp, method = "nearest_insertion")

path7 <- cut_tour(tour, "cut")

newtrain10=newtrain2[7001:8000,]

d=dist(newtrain10)

tsp=TSP(d)

tsp <- insert_dummy(tsp, label = "cut")

tour <- solve_TSP(tsp, method = "nearest_insertion")

path8 <- cut_tour(tour, "cut")

newtrain11=newtrain2[8001:9000,]

d=dist(newtrain11)

tsp=TSP(d)

tsp <- insert_dummy(tsp, label = "cut")

tour <- solve_TSP(tsp, method = "nearest_insertion")

path9 <- cut_tour(tour, "cut")

newtrain12=newtrain2[9001:10000,]

d=dist(newtrain12)

tsp=TSP(d)

tsp <- insert_dummy(tsp, label = "cut")

tour <- solve_TSP(tsp, method = "nearest_insertion")

path10 <- cut_tour(tour, "cut")

newtrain13=newtrain2[10001:11000,]

d=dist(newtrain13)

tsp=TSP(d)

tsp <- insert_dummy(tsp, label = "cut")

tour <- solve_TSP(tsp, method = "nearest_insertion")

path11 <- cut_tour(tour, "cut")

newtrain14=newtrain2[11001:12000,]

d=dist(newtrain14)

tsp=TSP(d)

tsp <- insert_dummy(tsp, label = "cut")

tour <- solve_TSP(tsp, method = "nearest_insertion")

path12 <- cut_tour(tour, "cut")

newtrain15=newtrain2[12001:13000,]

d=dist(newtrain15)

tsp=TSP(d)

tsp <- insert_dummy(tsp, label = "cut")

tour <- solve_TSP(tsp, method = "nearest_insertion")

path13 <- cut_tour(tour, "cut")

newtrain16=newtrain2[13001:14000,]

d=dist(newtrain16)

tsp=TSP(d)

tsp <- insert_dummy(tsp, label = "cut")

tour <- solve_TSP(tsp, method = "nearest_insertion")

path14 <- cut_tour(tour, "cut")

newtrain17=newtrain2[14001:15000,]

d=dist(newtrain17)

tsp=TSP(d)

tsp <- insert_dummy(tsp, label = "cut")

tour <- solve_TSP(tsp, method = "nearest_insertion")

path15 <- cut_tour(tour, "cut")

newtrain18=newtrain2[15001:16000,]

d=dist(newtrain18)

tsp=TSP(d)

tsp <- insert_dummy(tsp, label = "cut")

tour <- solve_TSP(tsp, method = "nearest_insertion")

path16 <- cut_tour(tour, "cut")

newtrain19=newtrain2[16001:17000,]

d=dist(newtrain19)

tsp=TSP(d)

tsp <- insert_dummy(tsp, label = "cut")

tour <- solve_TSP(tsp, method = "nearest_insertion")

path17 <- cut_tour(tour, "cut")

newtrain20=newtrain2[17001:18000,]

d=dist(newtrain20)

tsp=TSP(d)

tsp <- insert_dummy(tsp, label = "cut")

tour <- solve_TSP(tsp, method = "nearest_insertion")

path18 <- cut_tour(tour, "cut")

newtrain21=newtrain2[18001:19000,]

d=dist(newtrain21)

tsp=TSP(d)

tsp <- insert_dummy(tsp, label = "cut")

tour <- solve_TSP(tsp, method = "nearest_insertion")

path19 <- cut_tour(tour, "cut")

newtrain22=newtrain2[19001:20000,]

d=dist(newtrain22)

tsp=TSP(d)

tsp <- insert_dummy(tsp, label = "cut")

tour <- solve_TSP(tsp, method = "nearest_insertion")

path20 <- cut_tour(tour, "cut")

newtrain23=newtrain2[20001:21000,]

d=dist(newtrain23)

tsp=TSP(d)

tsp <- insert_dummy(tsp, label = "cut")

tour <- solve_TSP(tsp, method = "nearest_insertion")

path21 <- cut_tour(tour, "cut")

newtrain24=newtrain2[21001:22000,]

d=dist(newtrain24)

tsp=TSP(d)

tsp <- insert_dummy(tsp, label = "cut")

tour <- solve_TSP(tsp, method = "nearest_insertion")

path22 <- cut_tour(tour, "cut")

newtrain25=newtrain2[22001:23000,]

d=dist(newtrain25)

tsp=TSP(d)

tsp <- insert_dummy(tsp, label = "cut")

tour <- solve_TSP(tsp, method = "nearest_insertion")

path23 <- cut_tour(tour, "cut")

newtrain26=newtrain2[23001:24000,]

d=dist(newtrain26)

tsp=TSP(d)

tsp <- insert_dummy(tsp, label = "cut")

tour <- solve_TSP(tsp, method = "nearest_insertion")

path24 <- cut_tour(tour, "cut")

newtrain27=newtrain2[24001:25000,]

d=dist(newtrain27)

tsp=TSP(d)

tsp <- insert_dummy(tsp, label = "cut")

tour <- solve_TSP(tsp, method = "nearest_insertion")

path25 <- cut_tour(tour, "cut")

newtrain28=newtrain2[25001:26000,]

d=dist(newtrain28)

tsp=TSP(d)

tsp <- insert_dummy(tsp, label = "cut")

tour <- solve_TSP(tsp, method = "nearest_insertion")

path26 <- cut_tour(tour, "cut")

newtrain29=newtrain2[26001:27000,]

d=dist(newtrain29)

tsp=TSP(d)

tsp <- insert_dummy(tsp, label = "cut")

tour <- solve_TSP(tsp, method = "nearest_insertion")

path27 <- cut_tour(tour, "cut")

newtrain30=newtrain2[27001:28000,]

d=dist(newtrain30)

tsp=TSP(d)

tsp <- insert_dummy(tsp, label = "cut")

tour <- solve_TSP(tsp, method = "nearest_insertion")

path28 <- cut_tour(tour, "cut")

newtrain31=newtrain2[28001:29000,]

d=dist(newtrain31)

tsp=TSP(d)

tsp <- insert_dummy(tsp, label = "cut")

tour <- solve_TSP(tsp, method = "nearest_insertion")

path29 <- cut_tour(tour, "cut")

newtrain32=newtrain2[29001:30000,]

d=dist(newtrain32)

tsp=TSP(d)

tsp <- insert_dummy(tsp, label = "cut")

tour <- solve_TSP(tsp, method = "nearest_insertion")

path30 <- cut_tour(tour, "cut")

newtrain33=newtrain2[30001:31000,]

d=dist(newtrain33)

tsp=TSP(d)

tsp <- insert_dummy(tsp, label = "cut")

tour <- solve_TSP(tsp, method = "nearest_insertion")

path31 <- cut_tour(tour, "cut")

newtrain34=newtrain2[31001:32000,]

d=dist(newtrain34)

tsp=TSP(d)

tsp <- insert_dummy(tsp, label = "cut")

tour <- solve_TSP(tsp, method = "nearest_insertion")

path32 <- cut_tour(tour, "cut")

newtrain35=newtrain2[32001:33000,]

d=dist(newtrain35)

tsp=TSP(d)

tsp <- insert_dummy(tsp, label = "cut")

tour <- solve_TSP(tsp, method = "nearest_insertion")

path33 <- cut_tour(tour, "cut")

plot(newtrain$x,-newtrain$y)

plot(newtrain$x,-newtrain$y,cex=0.25)

for(i in 1:(length(path1)-1)){

inter2=gcIntermediate(c(newtrain3$x[path1[[i]]],-newtrain3$y[path1[[i]]]),c(newtrain3$x[path1[[i+1]]],-newtrain3$y[path1[[i+1]]]))

lines(inter2, col="red")

Sys.sleep(0.01)

}

for(i in 1:(length(path2)-1)){

inter2=gcIntermediate(c(newtrain4$x[path2[[i]]],-newtrain4$y[path2[[i]]]),c(newtrain4$x[path2[[i+1]]],-newtrain4$y[path2[[i+1]]]))

lines(inter2, col="red")

Sys.sleep(0.01)

}

for(i in 1:(length(path3)-1)){

inter2=gcIntermediate(c(newtrain5$x[path3[[i]]],-newtrain5$y[path3[[i]]]),c(newtrain5$x[path3[[i+1]]],-newtrain5$y[path3[[i+1]]]))

lines(inter2, col="red")

Sys.sleep(0.01)

}

for(i in 1:(length(path4)-1)){

inter2=gcIntermediate(c(newtrain6$x[path4[[i]]],-newtrain6$y[path4[[i]]]),c(newtrain6$x[path4[[i+1]]],-newtrain6$y[path4[[i+1]]]))

lines(inter2, col="red")

Sys.sleep(0.01)

}

for(i in 1:(length(path5)-1)){

inter2=gcIntermediate(c(newtrain7$x[path5[[i]]],-newtrain7$y[path5[[i]]]),c(newtrain7$x[path5[[i+1]]],-newtrain7$y[path5[[i+1]]]))

lines(inter2, col="red")

Sys.sleep(0.01)

}

for(i in 1:(length(path6)-1)){

inter2=gcIntermediate(c(newtrain8$x[path6[[i]]],-newtrain8$y[path6[[i]]]),c(newtrain8$x[path6[[i+1]]],-newtrain8$y[path6[[i+1]]]))

lines(inter2, col="red")

Sys.sleep(0.01)

}

for(i in 1:(length(path7)-1)){

inter2=gcIntermediate(c(newtrain9$x[path7[[i]]],-newtrain9$y[path7[[i]]]),c(newtrain9$x[path7[[i+1]]],-newtrain9$y[path7[[i+1]]]))

lines(inter2, col="red")

Sys.sleep(0.01)

}

for(i in 1:(length(path8)-1)){

inter2=gcIntermediate(c(newtrain10$x[path8[[i]]],-newtrain10$y[path8[[i]]]),c(newtrain10$x[path8[[i+1]]],-newtrain10$y[path8[[i+1]]]))

lines(inter2, col="red")

Sys.sleep(0.01)

}

for(i in 1:(length(path9)-1)){

inter2=gcIntermediate(c(newtrain11$x[path9[[i]]],-newtrain11$y[path9[[i]]]),c(newtrain11$x[path9[[i+1]]],-newtrain11$y[path9[[i+1]]]))

lines(inter2, col="red")

Sys.sleep(0.01)

}

for(i in 1:(length(path10)-1)){

inter2=gcIntermediate(c(newtrain12$x[path10[[i]]],-newtrain12$y[path10[[i]]]),c(newtrain12$x[path10[[i+1]]],-newtrain12$y[path10[[i+1]]]))

lines(inter2, col="red")

Sys.sleep(0.01)

}

for(i in 1:(length(path11)-1)){

inter2=gcIntermediate(c(newtrain13$x[path11[[i]]],-newtrain13$y[path11[[i]]]),c(newtrain13$x[path11[[i+1]]],-newtrain13$y[path11[[i+1]]]))

lines(inter2, col="red")

Sys.sleep(0.01)

}

for(i in 1:(length(path12)-1)){

inter2=gcIntermediate(c(newtrain14$x[path12[[i]]],-newtrain14$y[path12[[i]]]),c(newtrain14$x[path12[[i+1]]],-newtrain14$y[path12[[i+1]]]))

lines(inter2, col="red")

Sys.sleep(0.01)

}

for(i in 1:(length(path13)-1)){

inter2=gcIntermediate(c(newtrain15$x[path13[[i]]],-newtrain15$y[path13[[i]]]),c(newtrain15$x[path13[[i+1]]],-newtrain15$y[path13[[i+1]]]))

lines(inter2, col="red")

Sys.sleep(0.01)

}

for(i in 1:(length(path14)-1)){

inter2=gcIntermediate(c(newtrain16$x[path14[[i]]],-newtrain16$y[path14[[i]]]),c(newtrain16$x[path14[[i+1]]],-newtrain16$y[path14[[i+1]]]))

lines(inter2, col="red")

Sys.sleep(0.01)

}

for(i in 1:(length(path15)-1)){

inter2=gcIntermediate(c(newtrain17$x[path15[[i]]],-newtrain17$y[path15[[i]]]),c(newtrain17$x[path15[[i+1]]],-newtrain17$y[path15[[i+1]]]))

lines(inter2, col="red")

Sys.sleep(0.01)

}

for(i in 1:(length(path16)-1)){

inter2=gcIntermediate(c(newtrain18$x[path16[[i]]],-newtrain18$y[path16[[i]]]),c(newtrain18$x[path16[[i+1]]],-newtrain18$y[path16[[i+1]]]))

lines(inter2, col="red")

Sys.sleep(0.01)

}

for(i in 1:(length(path17)-1)){

inter2=gcIntermediate(c(newtrain19$x[path17[[i]]],-newtrain19$y[path17[[i]]]),c(newtrain19$x[path17[[i+1]]],-newtrain19$y[path17[[i+1]]]))

lines(inter2, col="red")

Sys.sleep(0.01)

}

for(i in 1:(length(path18)-1)){

inter2=gcIntermediate(c(newtrain20$x[path18[[i]]],-newtrain20$y[path18[[i]]]),c(newtrain20$x[path18[[i+1]]],-newtrain20$y[path18[[i+1]]]))

lines(inter2, col="red")

Sys.sleep(0.01)

}

for(i in 1:(length(path19)-1)){

inter2=gcIntermediate(c(newtrain21$x[path19[[i]]],-newtrain21$y[path19[[i]]]),c(newtrain21$x[path19[[i+1]]],-newtrain21$y[path19[[i+1]]]))

lines(inter2, col="red")

Sys.sleep(0.01)

}

for(i in 1:(length(path20)-1)){

inter2=gcIntermediate(c(newtrain22$x[path20[[i]]],-newtrain22$y[path20[[i]]]),c(newtrain22$x[path20[[i+1]]],-newtrain22$y[path20[[i+1]]]))

lines(inter2, col="red")

Sys.sleep(0.01)

}

for(i in 1:(length(path21)-1)){

inter2=gcIntermediate(c(newtrain23$x[path21[[i]]],-newtrain23$y[path21[[i]]]),c(newtrain23$x[path21[[i+1]]],-newtrain23$y[path21[[i+1]]]))

lines(inter2, col="red")

Sys.sleep(0.01)

}

for(i in 1:(length(path22)-1)){

inter2=gcIntermediate(c(newtrain24$x[path22[[i]]],-newtrain24$y[path22[[i]]]),c(newtrain24$x[path22[[i+1]]],-newtrain24$y[path22[[i+1]]]))

lines(inter2, col="red")

Sys.sleep(0.01)

}

for(i in 1:(length(path23)-1)){

inter2=gcIntermediate(c(newtrain25$x[path23[[i]]],-newtrain25$y[path23[[i]]]),c(newtrain25$x[path23[[i+1]]],-newtrain25$y[path23[[i+1]]]))

lines(inter2, col="red")

Sys.sleep(0.01)

}

for(i in 1:(length(path24)-1)){

inter2=gcIntermediate(c(newtrain26$x[path24[[i]]],-newtrain26$y[path24[[i]]]),c(newtrain26$x[path24[[i+1]]],-newtrain26$y[path24[[i+1]]]))

lines(inter2, col="red")

Sys.sleep(0.01)

}

for(i in 1:(length(path25)-1)){

inter2=gcIntermediate(c(newtrain27$x[path25[[i]]],-newtrain27$y[path25[[i]]]),c(newtrain27$x[path25[[i+1]]],-newtrain27$y[path25[[i+1]]]))

lines(inter2, col="red")

Sys.sleep(0.01)

}

for(i in 1:(length(path26)-1)){

inter2=gcIntermediate(c(newtrain28$x[path26[[i]]],-newtrain28$y[path26[[i]]]),c(newtrain28$x[path26[[i+1]]],-newtrain28$y[path26[[i+1]]]))

lines(inter2, col="red")

Sys.sleep(0.01)

}

for(i in 1:(length(path27)-1)){

inter2=gcIntermediate(c(newtrain29$x[path27[[i]]],-newtrain29$y[path27[[i]]]),c(newtrain29$x[path27[[i+1]]],-newtrain29$y[path27[[i+1]]]))

lines(inter2, col="red")

Sys.sleep(0.01)

}

for(i in 1:(length(path28)-1)){

inter2=gcIntermediate(c(newtrain30$x[path28[[i]]],-newtrain30$y[path28[[i]]]),c(newtrain30$x[path28[[i+1]]],-newtrain30$y[path28[[i+1]]]))

lines(inter2, col="red")

Sys.sleep(0.01)

}

for(i in 1:(length(path29)-1)){

inter2=gcIntermediate(c(newtrain31$x[path29[[i]]],-newtrain31$y[path29[[i]]]),c(newtrain31$x[path29[[i+1]]],-newtrain31$y[path29[[i+1]]]))

lines(inter2, col="red")

Sys.sleep(0.01)

}

for(i in 1:(length(path30)-1)){

inter2=gcIntermediate(c(newtrain32$x[path30[[i]]],-newtrain32$y[path30[[i]]]),c(newtrain32$x[path30[[i+1]]],-newtrain32$y[path30[[i+1]]]))

lines(inter2, col="red")

Sys.sleep(0.01)

}

for(i in 1:(length(path31)-1)){

inter2=gcIntermediate(c(newtrain33$x[path31[[i]]],-newtrain33$y[path31[[i]]]),c(newtrain33$x[path31[[i+1]]],-newtrain33$y[path31[[i+1]]]))

lines(inter2, col="red")

Sys.sleep(0.01)

}

for(i in 1:(length(path32)-1)){

inter2=gcIntermediate(c(newtrain34$x[path32[[i]]],-newtrain34$y[path32[[i]]]),c(newtrain34$x[path32[[i+1]]],-newtrain34$y[path32[[i+1]]]))

lines(inter2, col="red")

Sys.sleep(0.01)

}

for(i in 1:(length(path33)-1)){

inter2=gcIntermediate(c(newtrain35$x[path33[[i]]],-newtrain35$y[path33[[i]]]),c(newtrain35$x[path33[[i+1]]],-newtrain35$y[path33[[i+1]]]))

lines(inter2, col="red")

Sys.sleep(0.01)

}

2. Character

2. Character